In the field of computer security, security information and event management (SIEM) software products and services combine security information management (SIM) and security event management (SEM). They provide real-time analysis of security alerts generated by applications and network hardware.

Vendors sell SIEM as on-premises software or appliances, as managed services, or as cloud-based instances. These products are also used to log security data and generate reports for compliance purposes.[1]

Overview

The acronyms SEM, SIM and SIEM have sometimes been used interchangeably.[2]

The segment of security management that deals with real-time monitoring, correlation of events, notifications and console views is known as security event management (SEM).

The second area provides long-term storage as well as analysis, manipulation and reporting of log data and security records of the type collated by SEM software, and is known as security information management (SIM).[3]

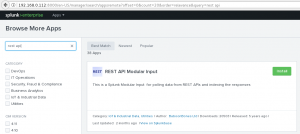

As with many definitions of capabilities, evolving requirements continually shape derivatives of SIEM product categories. Organizations are turning to big data platforms, such as Apache Hadoop, to complement SIEM capabilities by extending data storage capacity and analytic flexibility.[4][5]

Advanced SIEMs have evolved to include user and entity behavior analytics (UEBA) and security orchestration and automated response (SOAR).

The term security information event management (SIEM), coined by Mark Nicolett and Amrit Williams of Gartner in 2005,[6] describes the product capabilities of gathering, analyzing and presenting information from:

- network and security devices

- identity and access-management applications

- vulnerability management and policy-compliance tools

- operating-system, database and application logs

- external threat data

A key focus is to monitor and help manage user and service privileges, directory services and other system-configuration changes, as well as to provide log auditing, review and incident response.[3]

Capabilities/Components

- Data aggregation: Log management aggregates data from many sources, including network and security devices, servers, databases and applications, providing the ability to consolidate monitored data to help avoid missing crucial events.

- Correlation: Looks for common attributes and links events together into meaningful bundles. This technology provides the ability to perform a variety of correlation techniques to integrate different sources, in order to turn data into useful information. Correlation is typically a function of the security event management portion of a full SIEM solution.[7]

- Alerting: The automated analysis of correlated events and production of alerts, to notify recipients of immediate issues. Alerts can be shown on a dashboard or sent via third-party channels such as email.

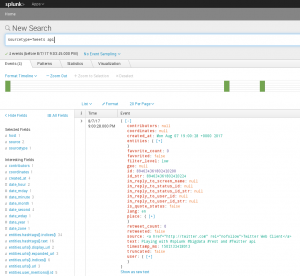

- Dashboards: Tools can take event data and turn it into informational charts to assist in seeing patterns or identifying activity that does not follow a standard pattern.[8]

- Compliance: Applications can be employed to automate the gathering of compliance data, producing reports that adapt to existing security, governance and auditing processes.[9]

- Retention: Employing long-term storage of historical data to facilitate correlation of data over time, and to provide the retention necessary for compliance requirements. Long-term log data retention is critical in forensic investigations, as a network breach is unlikely to be discovered at the time it occurs.[10]

- Forensic analysis: The ability to search across logs on different nodes and time periods based on specific criteria. This removes the need to aggregate log information mentally or to search through thousands of individual logs.[9]
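The correlation and alerting capabilities described above can be illustrated with a minimal sketch. It assumes events have already been normalized into `(timestamp, source_ip, event_type)` tuples, and it links repeated failed logins from one source inside a sliding time window into a single alert. The event data, the `correlate_failed_logins` function, and the threshold and window values are all hypothetical choices for illustration, not taken from any particular SIEM product.

```python
from collections import defaultdict, deque
from datetime import datetime, timedelta

# Hypothetical, already-normalized events: (timestamp, source_ip, event_type).
EVENTS = [
    (datetime(2024, 1, 1, 12, 0, 0), "10.0.0.5", "login_failed"),
    (datetime(2024, 1, 1, 12, 0, 20), "10.0.0.5", "login_failed"),
    (datetime(2024, 1, 1, 12, 0, 40), "10.0.0.9", "login_failed"),
    (datetime(2024, 1, 1, 12, 0, 50), "10.0.0.5", "login_failed"),
    (datetime(2024, 1, 1, 12, 10, 0), "10.0.0.9", "login_failed"),
]


def correlate_failed_logins(events, threshold=3, window=timedelta(minutes=1)):
    """Return one alert per burst of >= threshold failures from a single source."""
    recent = defaultdict(deque)  # source_ip -> timestamps still inside the window
    alerts = []
    for ts, src, kind in sorted(events):
        if kind != "login_failed":
            continue
        timestamps = recent[src]
        timestamps.append(ts)
        # Drop timestamps that have fallen out of the sliding window.
        while ts - timestamps[0] > window:
            timestamps.popleft()
        if len(timestamps) >= threshold:
            alerts.append({"source_ip": src, "count": len(timestamps), "last_seen": ts})
            timestamps.clear()  # avoid re-alerting on the same burst
    return alerts


ALERTS = correlate_failed_logins(EVENTS)
for alert in ALERTS:
    print(alert)
```

In this sketch the common attribute used for correlation is the source address. A real deployment would likely correlate on several attributes (user, destination, event category) and route the resulting alerts to a dashboard or a notification channel.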

Use cases

Computer security researcher Chris Kubecka identified the following successful SIEM use cases, presented at the hacking conference 28C3 (Chaos Communication Congress).[11]

- SIEM visibility and anomaly detection could help detect zero-day exploits or polymorphic code, primarily because anti-virus detection rates are low against this type of rapidly changing malware.

- Parsing, log normalization and categorization can occur automatically, regardless of the type of computer or network device, as long as it can send a log.

- Visualization with a SIEM using security events and log failures can aid in pattern detection.

- Protocol anomalies, which can indicate a misconfiguration or a security issue, can be identified with a SIEM using pattern detection, alerting, baselines and dashboards.

- SIEMs can detect covert, malicious communications and encrypted channels.

- Cyberwarfare can be detected by SIEMs with accuracy, discovering both attackers and victims.
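As an illustration of the automatic parsing, normalization and categorization mentioned in the list above, the following sketch maps two differently formatted log lines onto one common schema. Both log formats, the field names in the schema, and the `normalize` function are invented for this example. Real devices emit many more formats, and production parsers are considerably more defensive.

```python
import re

# Hypothetical raw log lines in two made-up formats.
SSH_LINE = "2024-01-01T12:00:00Z host1 sshd: Failed password for alice from 10.0.0.5"
FW_LINE = "time=2024-01-01T12:00:05Z action=deny src=10.0.0.5 dst=192.168.1.10"

SSH_PATTERN = re.compile(
    r"^(?P<ts>\S+) (?P<host>\S+) sshd: "
    r"Failed password for (?P<user>\S+) from (?P<src>\S+)$"
)


def normalize(line):
    """Map a raw line onto a common schema, or return None if unrecognized."""
    match = SSH_PATTERN.match(line)
    if match:
        return {
            "timestamp": match["ts"],
            "category": "authentication_failure",
            "source_ip": match["src"],
            "detail": {"host": match["host"], "user": match["user"]},
        }
    if line.startswith("time="):
        # key=value format: split each pair once on the first "=".
        fields = dict(pair.split("=", 1) for pair in line.split())
        return {
            "timestamp": fields["time"],
            "category": "firewall_" + fields.get("action", "unknown"),
            "source_ip": fields.get("src"),
            "detail": {"destination_ip": fields.get("dst")},
        }
    return None


NORMALIZED = [normalize(line) for line in (SSH_LINE, FW_LINE, "unparsed noise")]
for record in NORMALIZED:
    print(record)
```

Once both records share the `source_ip` and `category` fields, later stages such as correlation or dashboards can treat them uniformly, regardless of which device produced the original line.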

Today, most SIEM systems work by deploying multiple collection agents in a hierarchical manner to gather security-related events from end-user devices, servers, network equipment, as well as specialized security equipment like firewalls, antivirus or intrusion prevention systems. The collectors forward events to a centralized management console where security analysts sift through the noise, connecting the dots and prioritizing security incidents.

In some systems, pre-processing may happen at edge collectors, with only certain events being passed through to a centralized management node. In this way, the volume of information being communicated and stored can be reduced. Although advancements in machine learning are helping systems to flag anomalies more accurately, analysts must still provide feedback, continuously educating the system about the environment.
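The edge pre-processing described above can be sketched as a simple severity filter, under the assumption that each event carries a `severity` field. The severity scale, the event dictionaries, and the `edge_filter` function are hypothetical. The sketch forwards events with an unrecognized severity rather than dropping them, which is one possible design choice among several.

```python
# Hypothetical severity scale; real products define their own levels.
SEVERITY = {"debug": 0, "info": 1, "warning": 2, "error": 3, "critical": 4}


def edge_filter(events, min_severity="warning"):
    """Split events into those forwarded to the central node and those dropped."""
    floor = SEVERITY[min_severity]
    forwarded, dropped = [], []
    for event in events:
        # Unknown severities default to the floor, so they are forwarded.
        level = SEVERITY.get(event["severity"], floor)
        (forwarded if level >= floor else dropped).append(event)
    return forwarded, dropped


EDGE_EVENTS = [
    {"id": 1, "severity": "debug", "msg": "heartbeat"},
    {"id": 2, "severity": "error", "msg": "service crashed"},
    {"id": 3, "severity": "info", "msg": "user logged in"},
    {"id": 4, "severity": "critical", "msg": "malware signature matched"},
    {"id": 5, "severity": "vendor-specific", "msg": "unclassified event"},
]

FORWARDED, DROPPED = edge_filter(EDGE_EVENTS)
print("forwarded:", [e["id"] for e in FORWARDED])
print("dropped:", [e["id"] for e in DROPPED])
```

Filtering at the collector reduces the volume sent to and stored at the central node, at the cost of losing low-severity context that might matter during a later investigation.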

Here are some of the most important features to review when evaluating SIEM products:

- Integration with other controls – Can the system give commands to other enterprise security controls to prevent or stop attacks in progress?

- Artificial intelligence – Can the system improve its own accuracy through machine learning and deep learning?

- Threat intelligence feeds – Can the system support threat intelligence feeds of the organization’s choosing or is it mandated to use a particular feed?

- Robust compliance reporting – Does the system include built-in reports for common compliance needs and provide the organization with the ability to customize or create new reports?

- Forensics capabilities – Can the system capture additional information about security events by recording the headers and contents of packets of interest?

Pronunciation

The SIEM acronym is alternately pronounced SEEM or SIM (with a silent e).